What will art look like in a more technologically advanced world? Ubiquitous computing makes art more readily accessible to anyone: I can look at a photo of any painting in history in mere seconds using Google, and you need only walk outside to see that nearly everyone walks around with headphones playing music from all over the world. When art becomes accessible on a personal level, people develop specific tastes for the pieces they like most, curating their own personal artistic experiences at the touch of a button. Shouldn’t it follow that art caters to this individualized experience? With a little code, all types of art have the potential to react to your taste as an observer, presenting themselves to you in a way they know will be more relevant to your life. Imagine exercise music that matches the beat of your footsteps as you run, sculptures that appeal to your artistic sensibilities and movies that change plots on the fly to give you the ending you’re craving. Such contextual art can have more influence on an audience and thus stretch thinking in a more significant way.

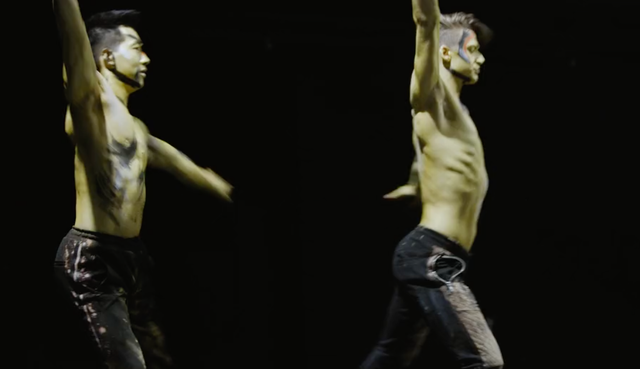

The Oscillations Dance Studio works with virtual reality (usually 360 video), dance and neuroscience to create pieces like this that aim, among other goals, to be more stimulating to each audience member than traditional experiences. The dance pieces they design can measure your brain’s stimulation using Brain-Computer Interface methods, which allow the team to evaluate a user’s interest and then modify the piece live to keep the user engaged. They’re not the only ones. Other examples of work in the artistic field of neurofeedback or biofeedback (art that has access to the information in your brain and body) include Nevermind, a horror video game that learns and adapts to a user’s fears by monitoring heart rate, and MindLight, a children’s mindfulness education tool that adapts to a child’s attention.

While they aren’t looking into quite as much personal information just yet, Oscillations first showed the Knight Lab Oscillations team a dance piece called Fire Together, which implements some of this technology in a more basic way. They track eye data to know whether or not a user is looking at the intended spot in a virtual reality experience. If so, the piece moves to its next ‘scene.’ If not, audio cues help guide the user to the right area. For instance, the piece begins outside a dance hall where the sound of applause, spatially linked to the theater in virtual reality, grows louder the longer you look away. Then, once you look in the right spot, you’re transported inside. This way, Oscillations can design experiences knowing that you’ve seen everything you’re supposed to see.
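
The gaze logic described above can be sketched in a few lines. This is only an illustration of the idea, not how Fire Together is actually built: the `GazeCue` class, the five-second volume ramp and the one-second dwell time are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class GazeCue:
    """Hypothetical helper: tracks gaze and derives an audio-cue volume."""

    ramp_seconds: float = 5.0   # assumed time for the cue to reach full volume
    dwell_seconds: float = 1.0  # assumed time on target before the scene changes
    away_time: float = 0.0
    on_target_time: float = 0.0

    def update(self, looking_at_target: bool, dt: float) -> bool:
        """Advance by dt seconds; return True once the scene should change."""
        if looking_at_target:
            self.on_target_time += dt
            self.away_time = 0.0
        else:
            self.away_time += dt
            self.on_target_time = 0.0
        return self.on_target_time >= self.dwell_seconds

    @property
    def volume(self) -> float:
        """Cue volume in [0, 1], growing linearly while the viewer looks away."""
        return min(1.0, self.away_time / self.ramp_seconds)
```

Each frame, an engine would call `update` with the result of its own gaze test and feed `volume` to the applause sound; a `True` return would trigger the move inside the dance hall.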

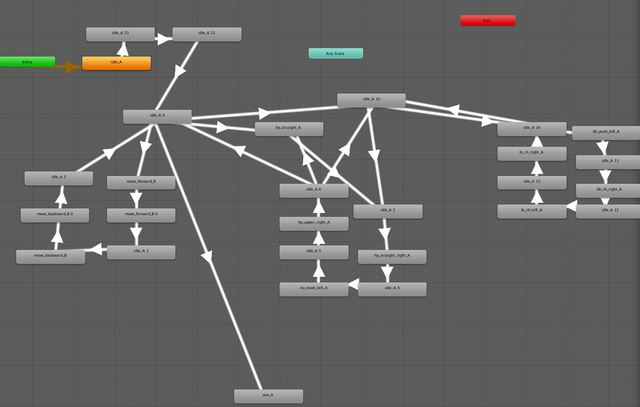

Just as Oscillations is currently most interested in using technology to improve a user’s experience of an entire dance piece, the Knight Lab has taken an interest in further examining that intersection between dance and tech, and in the potential applications for the broader sandbox of storytelling with technology. As a result, multiple teams have worked on the project over the past two years, each building on the research of the last. So far, teams have attempted to take motion capture footage of dance performances and splice the resulting files into rearrangeable chunks. In theory, the dance could then be dynamically sequenced according to some user input. This idea allows for the possibility of a dance performance that shows you the entire piece in the order it thinks you’ll find most enjoyable. The team used Unity as a medium for linking the chunks together, reasoning that a video game engine would best power this kind of user interaction later down the road.

The animation files proved to be the most troublesome aspect of that project, as the motion capture technology produced a file type with relational position data rather than independent values. For animations, this idea makes sense: every XYZ coordinate of a particular point depends upon its previous location after some transform (the animation) is applied, especially when a designer can move an object all around a 3D space in Unity and expect the animation to look “the same,” but in a different location. However, the dependency on prior position meant that unexpected visual problems occurred when splicing a full animation into chunks. How can we smoothly transition from chunk 4 to chunk 6 when chunk 6 is expecting the body to be in its position from the end of chunk 5?
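
A toy example makes the dependency concrete. The sketch below assumes a single tracked point whose chunks store per-frame XYZ offsets; the `chunks` data and both function names are invented for illustration and say nothing about the real motion capture format.

```python
# Hypothetical data: each chunk stores per-frame XYZ offsets relative to the
# previous frame, not absolute positions.
chunks = {
    4: [(1.0, 0.0, 0.0), (1.0, 0.0, 0.0)],
    5: [(0.0, 2.0, 0.0), (0.0, 2.0, 0.0)],
    6: [(0.0, 0.0, -1.0), (0.0, 0.0, 0.5)],
}


def end_position(order, start=(0.0, 0.0, 0.0)):
    """Accumulate every offset in the given chunk order onto a start position."""
    x, y, z = start
    for index in order:
        for dx, dy, dz in chunks[index]:
            x, y, z = x + dx, y + dy, z + dz
    return (x, y, z)


def start_mismatch(authored_before, played_before):
    """Gap between where a chunk was authored to begin and where the point is."""
    expected = end_position(authored_before)
    actual = end_position(played_before)
    return tuple(e - a for e, a in zip(expected, actual))


# Chunk 6 was authored to follow chunks 4 and 5, but only chunk 4 was played.
gap = start_mismatch([4, 5], [4])
```

Here `gap` is the offset contributed by the skipped chunk 5. Any non-zero gap is a jump the viewer would see unless something bridges it.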

The previous team solved this problem by manually fixing any visual glitches in the transitions wherever individual dance moves had been reordered. Dynamic sequencing, where the computer generates brand new orders of dance moves from the provided chunks, would therefore not be possible without a thorough algorithm for generating the kinds of transitions the previous team made by hand.
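
One simple starting point for such an algorithm is to generate in-between frames by interpolating from the last pose of one chunk to the first pose of the next. The sketch below is a stand-in, not the previous team's method: it treats a pose as a flat list of numbers and blends linearly, whereas a real skeletal animation would more likely blend joint rotations and would need far more care to look natural. The function names are hypothetical.

```python
def lerp_pose(pose_a, pose_b, t):
    """Linearly blend two poses (flat lists of floats); t=0 gives a, t=1 gives b."""
    return [a + (b - a) * t for a, b in zip(pose_a, pose_b)]


def make_transition(end_pose, start_pose, frames):
    """Return `frames` in-between poses, excluding both endpoints."""
    return [
        lerp_pose(end_pose, start_pose, i / (frames + 1))
        for i in range(1, frames + 1)
    ]


# Bridge the end of one chunk to the start of another with three frames.
bridge = make_transition([0.0, 0.0], [4.0, 8.0], 3)
```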

When the current Knight Lab team first researched the various file types for animation, we worried that the ins and outs of a particular technology could bog us down for years rather than allowing us to explore the true goal of the Oscillations project, which we initially boiled down to: How can we make reactive art? (As we near the end of the project, I think the question we were really exploring was: What can reactive art do well, and what are the necessary considerations when designing it?) We figured that the technological issues the previous group faced would be solved with time, and that we’d learn more about the future space those solutions will enable if we created a simplified, reactive prototype.

At first, we looked for stand-in parallels for the Oscillations team’s work. We pulled a basic kung-fu animation asset from Unity as a substitute for a dance. We simulated “Brain-Computer Interaction” by measuring the time between button presses. We created a basic animation table with three stages or ‘chunks,’ which could flow directly between each other in any order seamlessly. The determining factor of these transitions was the tempo the user’s button presses created, which also directly influenced the speed of the animation.
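
As a rough illustration of that mapping, the sketch below turns press timestamps into a tempo, then into a chunk choice and a playback speed. The window size, the BPM thresholds, the 100 BPM baseline and the chunk labels are all assumed values chosen for the example, not the ones used in the prototype.

```python
def tempo_bpm(press_times, window=4):
    """Estimate beats per minute from the most recent press intervals.

    press_times holds timestamps in seconds, oldest first. Returns None until
    at least two presses have been recorded.
    """
    recent = press_times[-(window + 1):]
    intervals = [later - earlier for earlier, later in zip(recent, recent[1:])]
    if not intervals:
        return None
    return 60.0 / (sum(intervals) / len(intervals))


def pick_chunk(bpm, slow_below=80.0, fast_above=140.0):
    """Choose one of three animation chunks from the tempo (assumed thresholds)."""
    if bpm < slow_below:
        return "slow"
    if bpm > fast_above:
        return "fast"
    return "medium"


def playback_speed(bpm, base_bpm=100.0):
    """Scale animation speed relative to an assumed baseline tempo."""
    return bpm / base_bpm


bpm = tempo_bpm([0.0, 0.5, 1.0, 1.5])  # one press every half second
```

Averaging over a short window rather than using only the last interval keeps a single mistimed press from causing an abrupt change.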

We first measured button presses, but it’s not a large jump from there to other input methods with a rhythm. For instance, if we were measuring a user’s heartbeat, a few lines of code could translate that heartbeat into a tempo and allow it to interact with our animation in the same way. This idea was, at first, our inspiration. We wanted to create an experience that felt like it was reflecting the user. Music came to mind immediately as an important aspect in making the experience feel tangible in this way, so while part of the team worked on composing custom music to this effect, the rest put in a placeholder track that could mimic the hectic, panic-inducing heartbeat of a fast tempo and the dull, dragging, calm beat of a slow tempo.

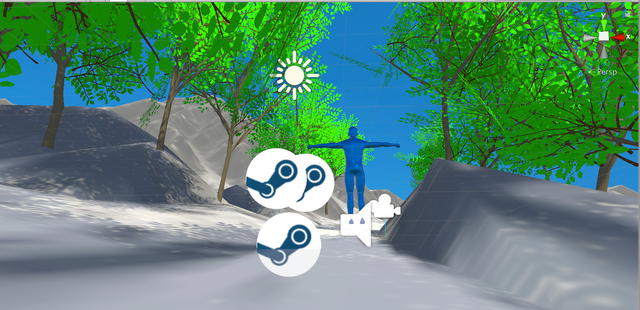

If the user is meant to be intimately experiencing an art piece that is a reflection of whoever decided to play, then we wanted them to be acutely aware of their impact on the piece with as few distractions as possible. The piece, therefore, must be immersive. Like the Oscillations team, we decided VR was the appropriate medium to achieve this goal. VR enabled us to add a more logical interaction system than a button press in the form of a drum-hitting gesture with the motion controls, which we implemented next.

From here we brainstormed and implemented a number of ideas to ‘improve’ the experience, but each seemed disconnected from the piece in a negative way. We were layering additional environmental factors onto our piece that loosely depended on the original interaction system, but they seemed out of place and disjointed. The idea of a user feeling agency and understanding the way the piece reflects them returned to us as an important cornerstone. The next round of updates featured a second interaction system running perpendicular to the first, but in a logical way. The user feels a bit like the conductor of an orchestra as they tap their hand and change the tempo of the song, so we wanted to lean into that idea and give their other hand an interaction that also felt like conducting. The changing distance between the two hands was an easily measurable metric that allowed us to implement more reactive elements that responded to something new rather than overloading one input.
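
The hand-distance metric is simple to compute from two controller positions. The sketch below normalizes it to a 0 to 1 value that could drive any reactive element; the function name and the 0.1 m and 1.5 m bounds are assumptions for illustration.

```python
import math


def hand_spread(left, right, min_dist=0.1, max_dist=1.5):
    """Map the distance between two XYZ positions (in metres) onto [0, 1].

    min_dist and max_dist are assumed bounds for hands together and arms wide.
    """
    distance = math.dist(left, right)
    t = (distance - min_dist) / (max_dist - min_dist)
    return max(0.0, min(1.0, t))  # clamp so out-of-range poses stay valid


spread = hand_spread((0.0, 1.2, 0.0), (0.8, 1.2, 0.0))
```

Because the value is clamped and unitless, it can be wired to something like volume or lighting intensity without that element needing to know anything about the controllers.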

Unity has a rapidly evolving infrastructure for VR integration, so many of the tutorials we tried to follow were severely outdated. We were using an HTC Vive, and were initially under the assumption that accessing the controller’s input data directly (particular button presses, accelerometer data, etc.) would be easy. However, it appears as though SteamVR generalized the controller system for Unity: rather than referring to specific buttons on a particular type of controller, a content creator refers to types of interactions (e.g. “Grabbing” as opposed to “Trigger button”). An interface within Unity then allows a user to loosely map certain controller functions to those interactions, but the process of doing so is somewhat obfuscated. We bypassed this system by using the position data of the Game Objects the controllers were contained within. For a more immersive experience, future project continuations may wish to delve deeper into this system.
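
Working from position data alone, a drum-hitting gesture can be approximated by watching for the moment a fast downward motion stops or reverses. The sketch below shows that idea on a list of timestamped heights; it is not the prototype's code, and the function name and the 1 m/s speed threshold are assumptions.

```python
def detect_hits(samples, min_down_speed=1.0):
    """Return timestamps where a fast downward swing stops or reverses.

    samples is a list of (time_seconds, height_metres) pairs with strictly
    increasing times. min_down_speed (m/s) is an assumed threshold that
    filters out slow drifting of the hand.
    """
    hits = []
    previous_velocity = 0.0
    for (t0, y0), (t1, y1) in zip(samples, samples[1:]):
        velocity = (y1 - y0) / (t1 - t0)
        if previous_velocity <= -min_down_speed and velocity >= 0.0:
            hits.append(t0)  # the low point of the swing
        previous_velocity = velocity
    return hits


swing = [(0.0, 1.0), (0.1, 0.8), (0.2, 0.6), (0.3, 0.7), (0.4, 0.9)]
hits = detect_hits(swing)
```

The resulting timestamps can be fed into the same tempo calculation that button presses would use, so the gesture becomes a drop-in replacement for the button.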

In addition to an art piece’s immersiveness, we found that its logic or intuitiveness had significant importance to its audience. We were initially simulating a deeper method of interaction (heartbeat, etc.) with a simplified version (button presses / VR controller) and felt that the input method was virtually interchangeable. We realized upon trying the experience that the specific interaction method had to be a larger consideration when designing what the piece reacts to. For instance, a user has to understand intuitively why they should be whipping a controller up and down. Creating an experience in which the user is an orchestral conductor gives meaning to this motion: the controller is like the conductor’s baton, and suddenly the user has a frame of reference for what to expect from their actions. A basis then exists for the “rules” of the interaction.

An art piece that truly allows the user agency, however, probably has to remove the idea of “rules” altogether. The more rules that exist in an experience, the more likely it is that multiple users have the same experience. “Generative” art is entirely unique to an individual audience member, involving a sophisticated code that produces different results every time. When interaction and generation blend together, a user’s every action influences the art piece in an entirely unique way that allows them to have true agency over the experience and, ultimately, to feel more connected to it.

Moving forward with the Knight Lab’s reactive animation project, there are four key factors to improve upon: its immersive, generative, intuitive, and interactive qualities. We want to make a piece that feels all-encompassing to your senses, creating individual experiences that are totally driven by numerous, logical points of interaction. Then, we can start thinking about how to blend the experience with live performance. Oscillations Dance Studio wants to live in the center of that blend, and we ought to work from both sides so that we can meet there, in the middle, where an audience feels they have agency over a performance that was tailored just for them, and thus allows them to get the most out of the experience.