In spring 2020, one of the Knight Lab Studio teams explored how augmented reality (AR) can be used to create experiences and tools within journalism and food media. They identified three major categories of experiences. Later this summer, we’ll be sharing their work on experiences focused on entertaining and connecting audiences. This week we’re sharing what they learned while building experiences intended to educate and inform.

Making Information Available in the Right Place with Image Tracking

AR image tracking can help with contextualizing written works and increasing reader understanding.

One of the most apparent ways that AR can add value is by making information appear in the context where it’s most useful, so we began by using AR image tracking to design educational scenes. At a basic level, image tracking detects 2D images and triggers the appearance of contextual, responsive AR content. The examples we created revolved around a specific food (ramen), but image tracking can be applied to many different scenarios. Implementing it involves a few short steps: taking a picture of the item you wish to annotate (such as an article or menu), uploading the image from your camera roll into an AR platform, and anchoring the AR scene to the image. All objects placed in the scene thereafter are positioned relative to this new anchor; that is, any models or graphics you add to the scene will be displayed in the space above the image, creating convenient “AR annotations.”
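To make the idea of anchoring concrete, here is a minimal Python sketch of how content defined relative to an image anchor could be mapped into world space. It is not Torch's API (Torch is a visual tool); the `ImageAnchor` type, the `to_world` helper, and the coordinate convention are all assumptions made for illustration, and only rotation about the vertical axis is modelled.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class ImageAnchor:
    """Hypothetical pose of a detected image: position in metres, yaw in degrees."""
    x: float
    y: float
    z: float
    yaw_degrees: float = 0.0


def to_world(anchor, offset):
    """Map an offset given in the anchor's frame to world coordinates.

    The offset is (right, up, forward) relative to the tracked image.
    Only rotation about the vertical (y) axis is handled in this sketch.
    """
    ox, oy, oz = offset
    yaw = math.radians(anchor.yaw_degrees)
    world_x = anchor.x + ox * math.cos(yaw) + oz * math.sin(yaw)
    world_z = anchor.z - ox * math.sin(yaw) + oz * math.cos(yaw)
    return (world_x, anchor.y + oy, world_z)


# Hypothetical usage: place a label 10 cm above a tracked menu image.
menu_anchor = ImageAnchor(x=1.0, y=0.0, z=2.0)
label_position = to_world(menu_anchor, (0.0, 0.1, 0.0))
```

Because every object stores only its offset from the anchor, moving or rotating the printed page moves the annotations with it.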

While the concept of image tracking is relatively simple, its utility is significant. Its main function is to provide users with information that complements the material they are reading. By scanning printed materials with their smartphone’s camera, users can trigger the appearance of augmented content. This content can come in many forms, including pictures, graphs, and 3D models, but it has one purpose: to teach. Studies have shown that visual aids are significant assets to the learning process. Adding visuals to articles and research papers, which are often filled with industry-specific jargon, can help readers remain engaged with what they are reading and make the content easier to digest. This was the inspiration behind the central experience of our project.

Before we reached the point of contextualizing articles, however, we started with a simpler venture. Using image tracking, we created an experience that lets restaurant patrons see what a dish looks like before ordering. The customer scans a dish on a restaurant menu using Torch, and a 3D model of the dish appears. Since visual appeal (i.e., food that “looks good”) is often a large part of deciding what to eat, we thought this AR experience would be a desirable addition to any restaurant menu.

Our second (and primary) experience involved two components: an annotated model and an article about the rise of ramen. One section of the article detailed the different elements that typically make up a bowl of ramen. The author included words such as chashu and nori, which may be unfamiliar to readers who are new to ramen; we saw this as an opportunity to integrate image tracking. We created an experience where, after scanning the corresponding section of the article in Torch AR, the reader sees an annotated model of a ramen bowl pop up, with all of the ingredients labeled. Now that the reader can actually visualize what the author is referring to, they can better understand and follow along with the article.

One issue we ran into when implementing image tracking was that the model always remained in view, even when the corresponding material was no longer in view. The whole point of image tracking is to provide information in context; when the material disappears, so should the related model. We worked around this by creating two separate scenes within Torch AR: one with the annotated model and one without. If Torch detects the relevant article section, it switches to the first scene and displays the model. If the relevant article section is nowhere to be found (i.e., the page was turned or the article was put away), Torch switches to the second scene and the annotated model is removed from view.

Note that, in comparison to our other journalistic experience detailed in the next section, this article annotation method is meant to serve as a quick information reference rather than a complete, culturally immersive experience.
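The two-scene workaround for keeping the model in context can be sketched in a few lines of Python. This is only an illustration of the logic, not Torch's API: the scene names and the `select_scene` and `scenes_over_time` helpers are hypothetical, and the list of booleans stands in for whatever the tracker reports on each camera frame.

```python
# Hypothetical scene names; Torch scenes are configured visually, not in code.
MODEL_SCENE = "annotated_model"  # scene containing the labeled ramen bowl
EMPTY_SCENE = "empty"            # scene with no model in it


def select_scene(target_visible: bool) -> str:
    """Pick the scene to show based on whether the article section is detected."""
    return MODEL_SCENE if target_visible else EMPTY_SCENE


def scenes_over_time(detections):
    """Return the scene shown for each frame, given per-frame detection results."""
    return [select_scene(visible) for visible in detections]


# Hypothetical usage: the page is found, stays in view, then is turned.
frames = [False, True, True, False]
shown = scenes_over_time(frames)
```

The point of the design is that the model's visibility is derived from the current detection state on every frame, rather than being switched on once and left on.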

Building Exploratory Sensory Experiences

AR offers creators the tools to build intimate and culture-specific environments. Additionally, it can offer audiences visually-pleasing and exploratory experiences that promote active self-learning.

Briefly, why we did what we did:

We prototyped an interactive Torch AR experience to supplement an existing New Yorker piece that introduces the history of ramen to its readers. Before delving into the details, let us briefly touch on three reasons behind this decision, which we arrived at after doing some research.

- Articles that focus on a particular food’s culture and history often do so with a large amount of text, rarely using visuals or video.

- Articles or magazines that critique food texture and smell sometimes employ jargon that readers are unable to relate to.

- Articles that elaborate on food popular in a certain culture might use culture-specific words that outsiders will have no context to understand.

Thus, we concluded that there is a gap to fill in food journalism, a field whose subject is itself heavily defined by how it looks.

What did we build?

Scene 1 Establishing the shot

Visuals

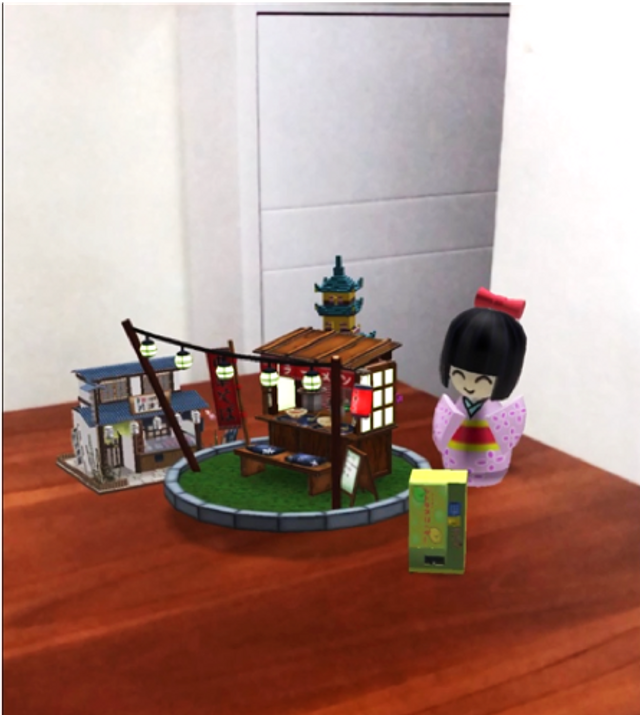

We used 3D models from Sketchfab, which you can drag and drop directly into your scene in Torch AR. These models are storytelling tools that help users establish, at a glance, that ramen either originated in Japan or has been heavily associated with Japan.

Audio

We started off the scene with a friendly “Konnichiwa” (which means “hello” in Japanese). This is followed by a voiceover sourced from a YouTube video by ATTN: on the history of ramen. To create an inviting and authentic atmosphere, we also overlaid a delicate Japanese instrumental piece across the entire experience.

Interaction

Advances to Scene 2 when the user taps anywhere on the screen (to acknowledge a general scene change).

Scene 2 Focusing on the subject

Visuals

As the user enters this scene, a Japanese porcelain doll appears. We also made the central ramen noodle stall rotate to highlight the focus of this experience – ramen.

Audio

We integrated Japanese restaurant ambience, punctuated by occasional chatter from stall owners and customers. This signals to the user that they are in a Japanese stall and conveys what the ramen-eating experience sounds like.

Interaction

Advances to Scene 3 when the user taps on the ramen noodle stall (subject focus).

Scene 3 “Zooming in” to ramen

Visuals

The Japanese porcelain doll scales up, and in front of it is a 3D photogrammetry model of a bowl of ramen. This model is photorealistic because it was scanned from an actual bowl of ramen.

Audio

We started the scene with another part of the voiceover from ATTN:’s video, which pairs information about ramen with the visuals: the user gets to see the ramen while listening to the voiceover.

Interaction

Advances to Scene 4 when the user taps on the bowl of noodles (to see it in detail).

Scene 4 Isolating the bowl of ramen

Visuals

The porcelain doll returns to its original size while the bowl of ramen scales up to the front of the user’s camera, which indicates to the user that this scene was solely designed to spotlight the bowl of ramen. They can look at it for as long as they like.

Audio

Japanese music + restaurant ambience

Interaction

Users can rotate, position and scale only the bowl of ramen however they like.
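The four scenes and their tap interactions form a small state machine. The sketch below restates that flow in Python under stated assumptions: the target names (`"ramen_stall"`, `"ramen_bowl"`, `"doll"`) and the `next_scene` helper are hypothetical labels for illustration, since the actual experience was assembled in Torch's visual editor rather than in code.

```python
# Taps that advance the experience: (current scene, tapped target) -> next scene.
# Scene 1 is handled separately because any tap advances it.
TRANSITIONS = {
    (2, "ramen_stall"): 3,  # subject focus
    (3, "ramen_bowl"): 4,   # see the bowl in detail
}


def next_scene(scene: int, tapped: str) -> int:
    """Return the scene that follows a tap; unrecognized taps keep the current scene."""
    if scene == 1:
        return 2  # tapping anywhere acknowledges the general scene change
    return TRANSITIONS.get((scene, tapped), scene)


# Hypothetical usage: replay a sequence of taps starting from Scene 1.
scene = 1
for tap in ["doll", "doll", "ramen_stall", "ramen_bowl", "ramen_bowl"]:
    scene = next_scene(scene, tap)
```

Scene 4 has no outgoing transition in this sketch, which matches the description above: once there, the user simply manipulates the bowl for as long as they like.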

How can AR enhance the experience?

Because food holds so much cultural meaning, we want to stimulate readers’ interest by transporting them into a new culture and environment from the comfort of their own homes. With AR, they can be comfortably exposed to knowledge that might otherwise have seemed foreign and therefore intimidating.

Readers play an active role in learning about and interacting with new cultural information, in stark contrast to the more passive experience delivered through text or video. Additionally, cultural content cannot be fully understood or learned through words or sounds alone. AR combines different media, so it can replicate more accurately and holistically what a culture represents and feels like.

Thus, not only can this novel experience appeal to people’s sense of curiosity, it can also encourage them to do their own research on the foreign environment in their own time.

What does this mean for the future?

For now, audio capabilities for AR are limited: it is difficult to create a layered soundscape that tells as compelling a story as interactive 3D models do. One way to work around this is to embed audio (e.g., Japanese music) in the article rather than in the experience. The advantage is that the experience is less taxing on the user’s phone; the drawback is that you can’t layer multiple music tracks or sound effects and align them with the AR experience.

While this is the case for now, AR still adds value to a word-heavy food journalism article by offering an alternative to users who might find themselves lost in a text-cluttered article. In a world of ever more visual experiences, it takes a lot more to catch the audience’s eye now than in the past. Hence, an AR experience in an article works much like an effective advertisement for the article.

Something to ponder on…

If AR presents such unique content-building opportunities for creators, why haven’t more people adopted it? Because AR as a content tool, on its own, is far from being on par with other media such as film and text. It is instead mostly used as a supplement to them, as is the case here. On one hand, AR companies are uncertain which AR tools to invest research in because there is so little outstanding AR content. On the other hand, users are not keen to use AR tools because of their limited features and potential learning curve. The trade-off between their time and AR’s functionality just does not seem worth it.

How do we navigate the path forward for AR, then? Perhaps companies and AR content creators could collaborate more closely. Perhaps AR could first be thought of as a supplemental tool for storytelling. There are many possibilities and uncertainties here, which is what makes this budding industry intriguing. With the right drive and creative direction, AR creators, both companies and individuals, could pave a radical new way for how the world consumes content.